Cloudflare recently showcased in a blog post titled Use Snowflake with R2 to extend your global data lake how to connect Cloudflare R2 as an external storage using Snowflake. This allows us to load, clean, and compute data on R2, effectively turning it into a data lake. It's important to note that this feature is currently in the beta testing phase, so an authorization request needs to be submitted through a service ticket.

It is worth mentioning that Databend Cloud has been supporting Cloudflare R2 for quite some time now. As an example, blockchain data company GoldSky stores their public datasets on R2, and we can access and analyze this data using Databend Cloud. This represents a typical use case of a data lake. You can refer to the documentation from GoldSky for more information: Load data from indexedxyz.

In this post, we will perform a cost test using the dataset provided by GoldSky. Please note that this dataset is dynamically updated, so the results may vary at different points in time. The total size of the dataset is approximately 5.73 GB, consisting of over 18,000 Parquet files, with an average size of around 340 KB per file.

What is Cloueflare R2?

Cloudflare R2 is an object storage service introduced by Cloudflare, which is fully compatible with Amazon's AWS S3 service. Object storage services are used to store large amounts of unstructured data, such as images, videos, audios, documents, and more.

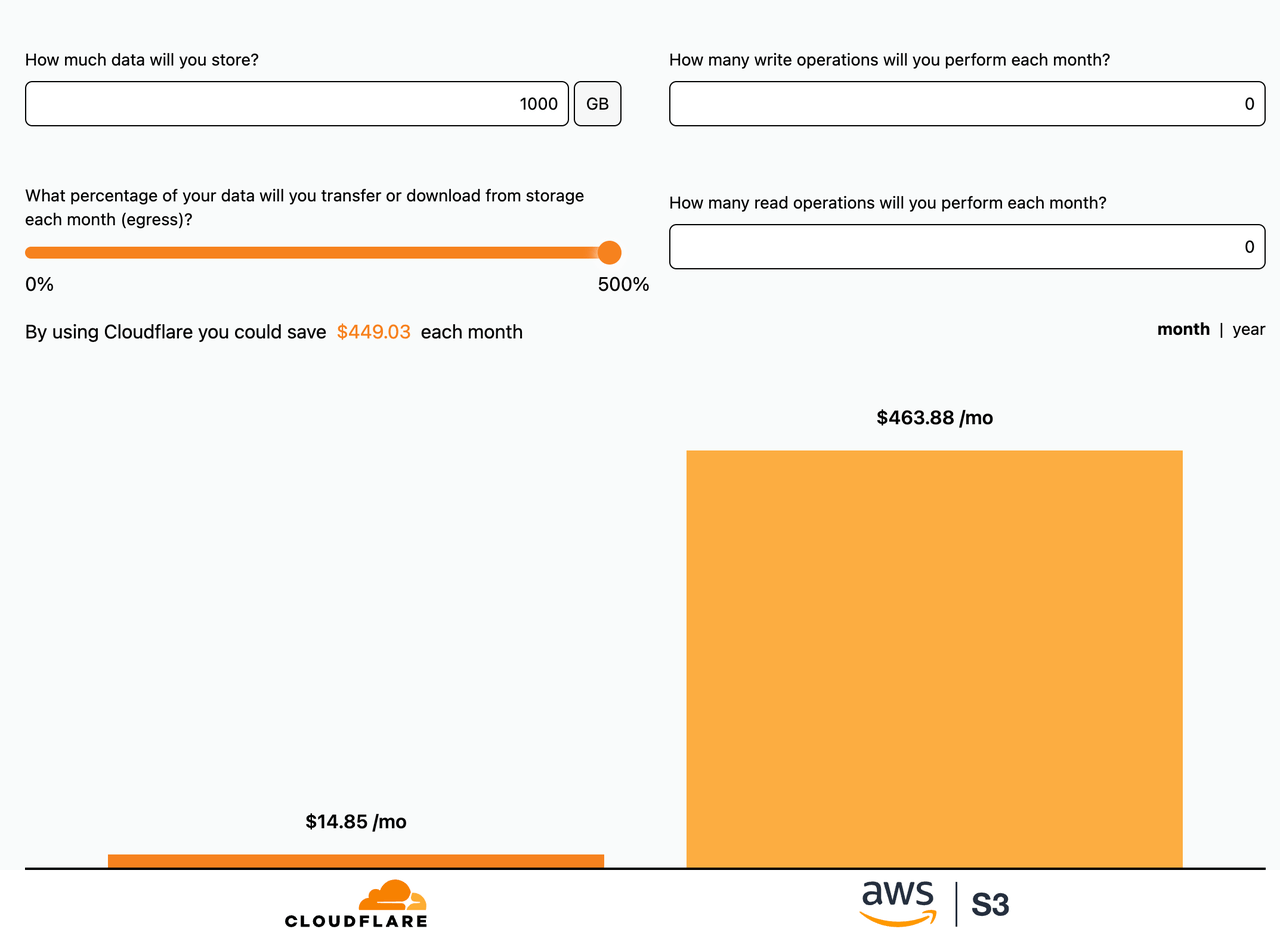

One of the main advantages of Cloudflare R2 is its cost handling for public network traffic. Typically, object storage services offer two access methods: AZ (Availability Zone) access and public network access. AZ access refers to accessing cloud services within the same region, which incurs relatively lower costs. Public network access, on the other hand, involves accessing object storage via the public internet, which can be more expensive.

However, when using Cloudflare R2, there are no data egress charges for users accessing data stored on R2 via the public network. This means that if you have a large amount of data stored on R2 and need to share or download it through the public internet, R2 can potentially save you significant costs.

For instance, let's consider a scenario where you have made 1TB of data publicly accessible on R2, and there is a monthly download of 5TB of data. By using R2 instead of AWS S3, you can save approximately $449.03 per month. You can perform a detailed cost comparison using the cost calculator website provided by Cloudflare at https://r2-calculator.cloudflare.com/.

Preparation: Creating a Stage and a Table

In Databend Cloud:

-- Create database

CREATE DATABASE indexedxyz;

USE indexedxyz;

-- Create external stage

CREATE STAGE r2_stage

URL='s3://indexed-xyz/ethereum/decoded/logs/v1.2.0/partition_key=9d/'

CONNECTION = (

REGION = 'auto'

ENDPOINT_URL = 'https://ed5d915e0259fcddb2ab1ce5592040c3.r2.cloudflarestorage.com'

ACCESS_KEY_ID = '43c31ff797ec2387177cabab6d18f15a'

SECRET_ACCESS_KEY = 'afb354f05026f2512557922974e9dd2fdb21e5c2f5cbf929b35f0645fb284cf7');

-- Create table

CREATE TABLE `contract` (

`block_time` BIGINT NULL,

`address` VARCHAR NULL,

`event_signature` VARCHAR NULL,

`event_params` ARRAY(STRING NULL) NULL,

`block_number` BIGINT NULL,

`block_hash` VARCHAR NULL,

`log_index` BIGINT NULL,

`transaction_hash` VARCHAR NULL,

`transaction_index` BIGINT NULL,

`data` VARCHAR NULL,

`topics` VARCHAR NULL,

`id` VARCHAR NULL

);

In Snowflake:

-- Create database

CREATE DATABASE indexedxyz;

USE indexedxyz;

-- Create external stage

CREATE STAGE r2_stage

REGION = 'auto'

URL = 's3compat://indexed-xyz/ethereum/decoded/logs/v1.2.0/partition_key=9d/'

ENDPOINT = 'ed5d915e0259fcddb2ab1ce5592040c3.r2.cloudflarestorage.com'

CREDENTIALS = (AWS_KEY_ID = '43c31ff797ec2387177cabab6d18f15a' AWS_SECRET_KEY ='afb354f05026f2512557922974e9dd2fdb21e5c2f5cbf929b35f0645fb284cf7');

-- Create table

CREATE TABLE `contract` (

`block_time` NUMBER(38, 0),

`address` TEXT,

`event_signature` TEXT,

`event_params` VARIANT,

`block_number` NUMBER(38, 0) ,

`block_hash` TEXT ,

`log_index` NUMBER(38, 0) ,

`transaction_hash` TEXT ,

`transaction_index` NUMBER(38, 0) ,

`data` TEXT ,

`topics` TEXT ,

`id` TEXT

);

Loading Data from R2

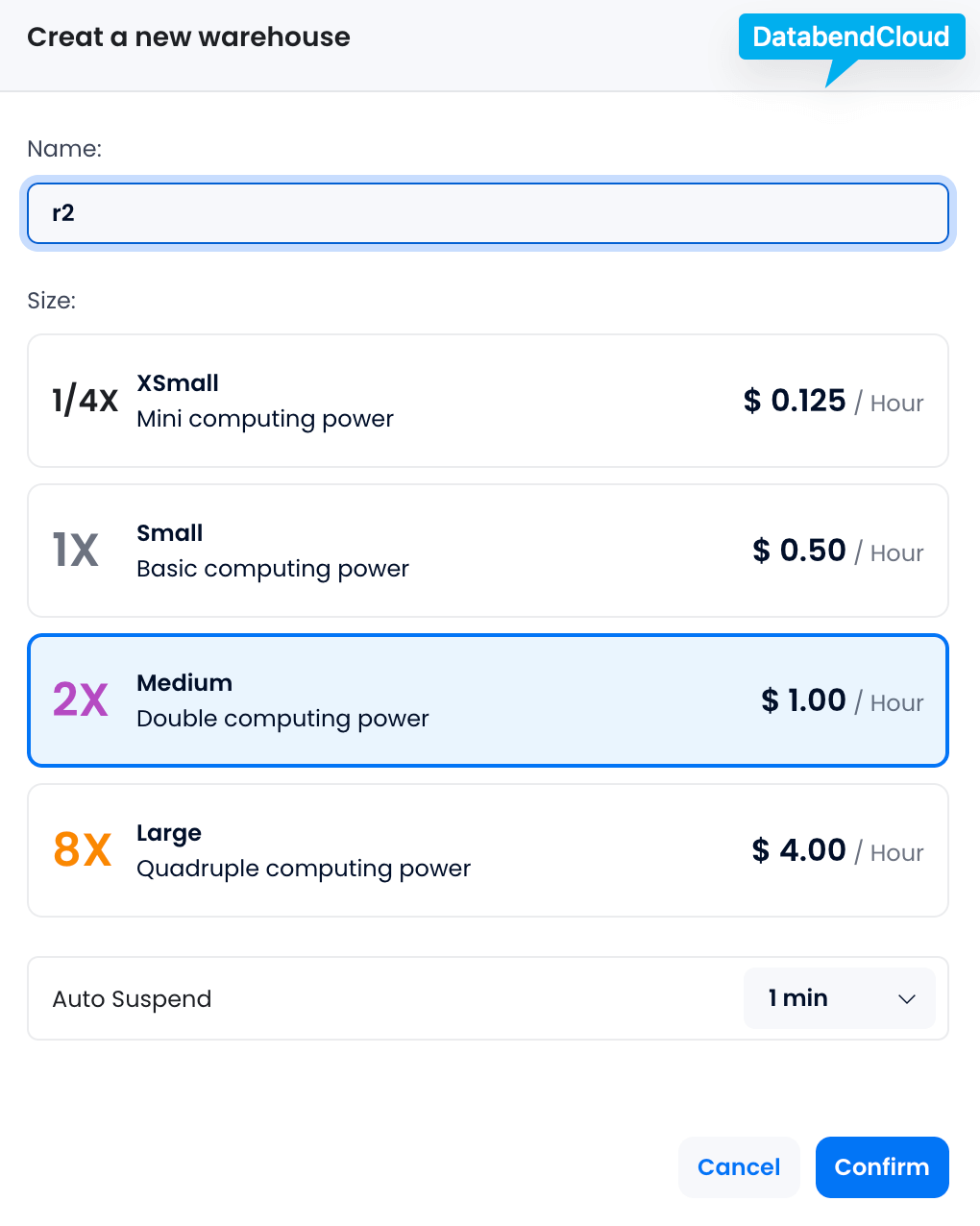

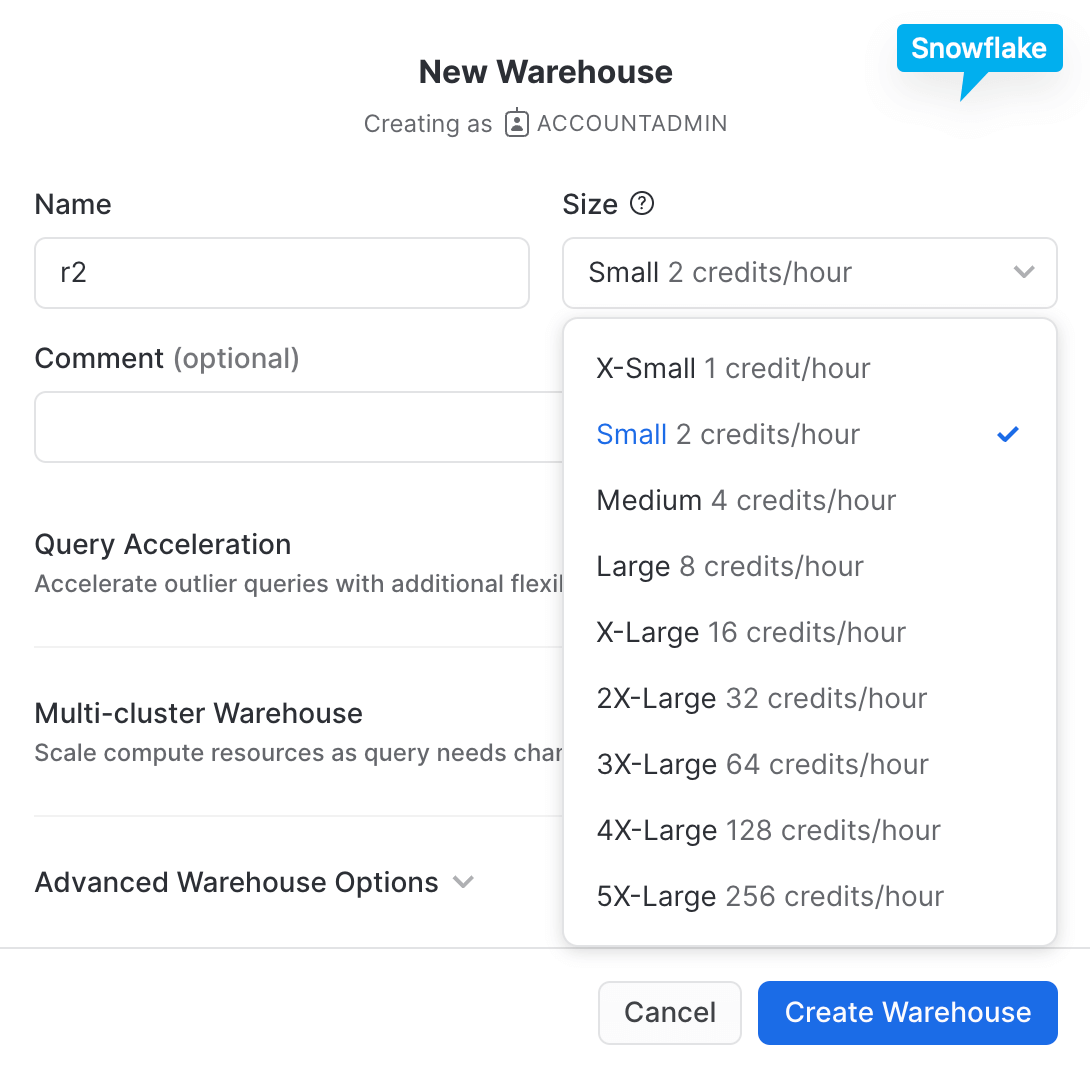

To load data, we will use the following sizes of warehouses from Databend Cloud and Snowflake:

| Warehouse Size | Pricing | |

|---|---|---|

| Snowflake | Small | $4/hour(2credits/hour) |

| Databend Cloud | Medium | $1/hour |

Loading with Databend CLoud

-- Load data from R2 to Databend Cloud

COPY INTO `contract` FROM @r2_stage FILE_FORMAT = (type = PARQUET);

-- 1m45s

Loading with Snowflake

COPY INTO `contract`

FROM (

SELECT

$1:block_time::number,

$1:address::varchar,

$1:event_signature::varchar,

$1:event_params::variant,

$1:block_number::number,

$1:block_hash::varchar,

$1:log_index::number,

$1:transaction_hash::varchar,

$1:transaction_index::number,

$1:data::varchar,

$1:topics::varchar,

$1:id::varchar

FROM @r2_stage

)

FILE_FORMAT = (type=PARQUET);

--23m 18s

Cost Comparison for Loading GoldSky Dataset

If you plan to use Cloudflare R2 as your data lake, choosing Databend Cloud as your data warehouse would be a highly cost-effective option. This can significantly reduce your computing expenses and allow you to utilize resources more efficiently.

| SQL | Size | Time(seconds) | Speed | Pricing($/hour) | Total Cost($) | |

|---|---|---|---|---|---|---|

| Snowflake | COPY | 5.73GB | 23m 18s | 4.20MB/s | Small, $4/hour | 0.78$ |

| Databend Cloud | COPY | 5.73GB | 1m 45s | 55.88MB/s | Medium, $1/hour | 0.03$ |

Computing Cost Analysis

Q1:

SELECT COUNT(*)

FROM `contract`

WHERE `address` = '0xbc4ca0eda7647a8ab7c2061c2e118a18a936f13d'

AND `event_signature` = 'Transfer(address,address,uint256)';

Q2:

SELECT

date_trunc('month', to_timestamp(block_time)),

COUNT(*)

FROM

`contract`

WHERE

address = '0xbc4ca0eda7647a8ab7c2061c2e118a18a936f13d'

AND event_signature = 'Transfer(address,address,uint256)'

GROUP BY

1;

For Q1, Snowflake costs 4.2 times more than Databend Cloud:

| Q1 Hot-Run(Data Cache) | Pricing | |

|---|---|---|

| Snowflake | 172ms | Small, $4/hour |

| Databend | 164ms | Medium, $1/hour |

For Q2, Snowflake costs 2.6 times more than Databend Cloud:

| Q2 Hot-Run(Data Cache) | Pricing | |

|---|---|---|

| Snowflake | 123ms | Small, $4/hour |

| Databend | 188ms | Medium, $1/hour |

In Q1 query, Snowflake has a runtime of 172 milliseconds with a cost of $4 per hour, while Databend Cloud has a slightly faster runtime of 164 milliseconds and a lower cost of $1 per hour. This indicates that in the Q1 query, the cost of using Databend Cloud is only 1/4.2 of Snowflake's cost.

In Q2 query, Snowflake has a runtime of 123 milliseconds with a cost of $4 per hour, while Databend Cloud has a longer runtime of 188 milliseconds. However, Databend Cloud still maintains a lower cost of $1 per hour. This indicates that in the Q2 query, the cost of using Databend Cloud is only 1/2.6 of Snowflake's cost.

Although there may be slight performance differences between Snowflake and Databend Cloud, Databend Cloud clearly outperforms Snowflake in terms of cost-effectiveness.

Summary

Cloudflare R2 is an object storage service that is fully compatible with AWS S3. One of its standout features is the absence of any charges for accessing data over the public internet, making it an excellent choice for a data lake.

In this regard, Databend Cloud shines, especially when dealing with the public internet access mode of Cloudflare R2. Not only does it offer significantly lower pricing compared to Snowflake, but it also provides optimizations specifically tailored for R2. For example, Databend Cloud employs batch reading strategies for small Parquet files, which is crucial in the public internet access mode to reduce network requests and improve read efficiency. Additionally, Databend leverages extensive parallel processing for Parquet files, further enhancing data retrieval speed.

For users utilizing Cloudflare R2 as their data lake, opting for Databend Cloud as the computing platform proves to be a more cost-effective choice. With its lower costs, comparable performance to Snowflake, and optimizations targeted at R2, Databend Cloud aligns well with the features and advantages of Cloudflare R2.