Data Agent Ready Database: Designing the Next-Gen Enterprise Data Warehouse

DatabendLabs, Feb 2, 2026

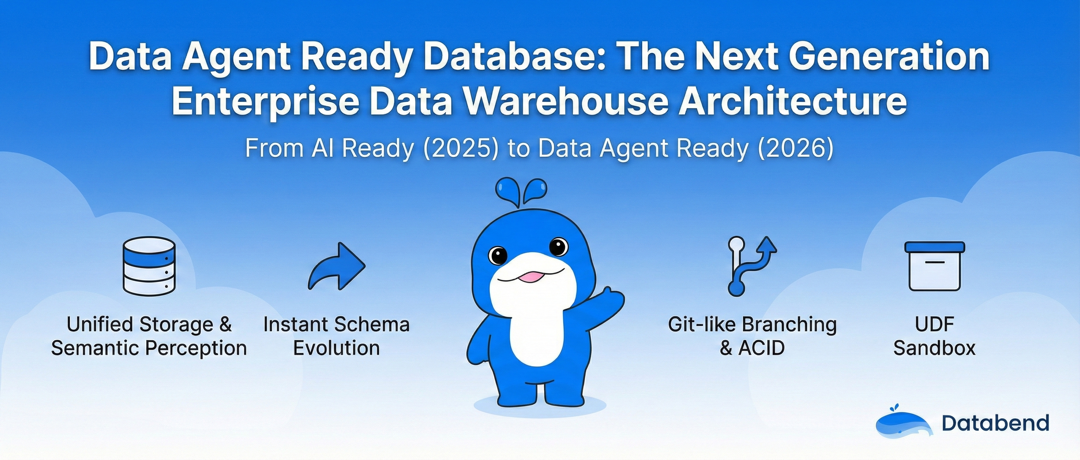

2025 marked the era of AI Ready databases—vector search and AI functions went from novel features to industry standard. In 2026, a new trend is emerging: Data Agent Ready.

With the rise of coding agents like Cursor and Claude Code, and the proliferation of data analysis agents, more database operations are being handled by AI. But enterprise environments are fundamentally different from personal experiments: Data Agents must deal with massive datasets and complex business logic. When the database user shifts from human to agent, the design philosophy must evolve accordingly.

OpenAI shared their experience in "Inside OpenAI's in-house data agent": managing 600 PB of data across 70,000 datasets, they found that "simply finding the right table can be one of the most time-consuming parts of doing analysis." Their core insight is that context is everything. Without sufficient context, even the most powerful models produce incorrect results.

From a Data Agent's perspective, the ideal database architecture should possess the following core traits.

Unified Storage with Semantic Awareness

Enterprise data is typically scattered across multiple systems: orders in OLTP databases, logs in Elasticsearch, spatial data in GIS systems, embeddings in vector databases, files in object storage. This fragmentation creates significant complexity for Data Agents—simply locating the right table can consume considerable time.

The first priority is unifying data access.

Build on object storage (like S3) as the foundation, with a unified interface for managing structured, semi-structured, and unstructured data. Data Agents need only a single SQL entry point to access all data resources.

But access alone isn't enough. Agents need not just to find data, but to understand its meaning—field semantics, lineage relationships between tables, update frequencies, and applicable scope. Without this context, even the most powerful models can produce incorrect results.

The database should provide a rich metadata layer: schema information, table lineage, column annotations, historical query patterns. This enables Data Agents to build deep understanding before executing queries. Runtime schema validation ensures reasoning is based on current ground truth.
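As a rough illustration of runtime schema validation, the sketch below checks the columns an agent plans to use against the live schema before any query runs. It uses SQLite from Python's standard library purely as a stand-in engine; the `ANNOTATIONS` dictionary and the helper names are hypothetical and are not part of any product API.

```python
import sqlite3

# Hypothetical column annotations an agent might receive from a metadata layer.
ANNOTATIONS = {
    "orders": {
        "order_id": "Unique order identifier",
        "amount": "Order total in USD, tax included",
    }
}

def live_columns(conn, table):
    """Return the column names the database reports right now."""
    # PRAGMA table_info is SQLite-specific; other engines expose
    # similar information through their own catalog views.
    rows = conn.execute(f"PRAGMA table_info({table})").fetchall()
    return [row[1] for row in rows]

def validate_columns(conn, table, wanted):
    """Return the columns in `wanted` that are missing from the live schema."""
    present = set(live_columns(conn, table))
    return [c for c in wanted if c not in present]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")

# The agent assumed a `discount` column exists; validation catches it up front.
missing = validate_columns(conn, "orders", ["order_id", "amount", "discount"])
```

An agent that gets a non-empty `missing` list can re-read the metadata and revise its plan instead of issuing a query built on a stale assumption.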

Instant Schema Evolution

Schema changes in traditional databases are expensive operations. Adding a column to a large table may require prolonged table locks and data rewrites. Yet Data Agents performing data exploration and automated processing need to adjust schemas frequently.

Schema changes must be instantaneous.

Through metadata versioning (Schema Versioning), column additions and deletions modify only metadata, leaving underlying data files untouched. Read-on-write techniques handle version compatibility automatically. Regardless of data volume, schema changes complete instantly. This allows Data Agents to iterate on data models quickly without worrying about change costs.
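The idea of metadata-only schema changes can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not a description of any engine's internals: the `Table` class is hypothetical, data files are plain lists of dictionaries, and columns are matched by name (a real system would more likely track stable column IDs so that a dropped and re-added name does not resurrect old values).

```python
from dataclasses import dataclass, field

@dataclass
class Table:
    # Every schema version is kept as a list of column names.
    schemas: list = field(default_factory=list)
    # Each data file is stored once, tagged with the schema version it was written under.
    files: list = field(default_factory=list)

    def current(self):
        return self.schemas[-1]

    def add_column(self, name):
        # Metadata-only change: record a new schema version, touch no data files.
        self.schemas.append(self.current() + [name])

    def drop_column(self, name):
        # Also metadata-only: the old values stay on disk but are no longer projected.
        self.schemas.append([c for c in self.current() if c != name])

    def write(self, rows):
        self.files.append((len(self.schemas) - 1, rows))

    def read(self):
        # Project every stored row onto the current schema at read time;
        # columns a file never had come back as None.
        cols = self.current()
        for _version, rows in self.files:
            for row in rows:
                yield {c: row.get(c) for c in cols}

t = Table(schemas=[["id", "amount"]])
t.write([{"id": 1, "amount": 9.5}])
t.add_column("currency")
t.write([{"id": 2, "amount": 3.0, "currency": "USD"}])
t.drop_column("amount")
result = list(t.read())
```

Both schema changes cost the same regardless of how many rows were written, because the first file is never rewritten; compatibility is resolved when rows are read.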

Zero-Overhead Git-like Branching

Giving production write access directly to a Data Agent poses significant security risks. If an agent "hallucinates" and accidentally deletes or modifies data, the consequences could be severe.

The solution is to manage data like code: introduce branching.

Zero-Copy Snapshot technology makes branch creation virtually free: it only creates a logical pointer, with no data duplication. All exploratory operations by Data Agents occur on isolated branches such as `dev` or `feature`, leaving `main` untouched.

Transparency is equally important. Every agent action should be traceable—what queries were executed, what data was modified, what assumptions drove the decisions. This transparency allows humans to review the agent's reasoning process, verify result correctness, and intervene when necessary.

```sql
-- Create a dev branch
ALTER TABLE orders CREATE BRANCH dev;

-- Data Agent explores on the branch without affecting production
SELECT * FROM orders/dev;

-- Branch from a specific point in time
ALTER TABLE orders CREATE BRANCH feature_branch
    AT (TIMESTAMP => '2026-01-27 10:00:00');
```
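To show why a branch built on snapshots can be nearly free, here is a minimal Python sketch in which a branch is just a name pointing at an immutable snapshot. The `BranchedTable` class and the file names are hypothetical and only illustrate the pointer idea; they do not reflect how any particular engine stores its metadata.

```python
class BranchedTable:
    def __init__(self):
        # Immutable snapshots: snapshot id -> tuple of data file names.
        self.snapshots = {0: ()}
        # A branch is only a name that points at a snapshot id.
        self.branches = {"main": 0}
        self._next_id = 1

    def create_branch(self, name, source="main"):
        # Zero-copy: only the pointer is duplicated, never the files.
        self.branches[name] = self.branches[source]

    def append(self, branch, file_name):
        # A write produces a new snapshot that shares all earlier files.
        files = self.snapshots[self.branches[branch]] + (file_name,)
        self.snapshots[self._next_id] = files
        self.branches[branch] = self._next_id
        self._next_id += 1

    def files(self, branch):
        return self.snapshots[self.branches[branch]]

orders = BranchedTable()
orders.append("main", "part-0001.parquet")
orders.create_branch("dev")
orders.append("dev", "part-0002.parquet")  # visible on dev only
```

After these calls, `main` still sees one file while `dev` sees two, and the shared file exists only once.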

Robust Transactions

Data Agent operations rarely consist of a single SQL statement. A typical workflow might include: reading from source tables, executing transformations, writing to intermediate tables, updating target tables—coordinated operations across multiple tables.

If one step fails while previous steps have already committed, the data ends up in an inconsistent state.

Multi-statement transaction support is essential.

Wrap multiple operations in a transaction: either all succeed and commit, or all roll back. This atomicity guarantee means Data Agents don't have to worry about data corruption from mid-workflow failures.

Going further, agents can leverage transaction rollback for self-correction—automatically rolling back on errors and retrying with adjusted strategies. Combined with a memory system, lessons from each correction are saved, avoiding repeated mistakes in future operations.
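The rollback-and-retry loop described above can be sketched with SQLite from Python's standard library, used here only as a convenient stand-in for a warehouse with multi-statement transactions. The table names, the `run_workflow` helper, and the `lessons` list (a placeholder for an agent memory system) are all illustrative assumptions.

```python
import sqlite3

def run_workflow(conn, statements):
    """Run all statements atomically; return True on commit, False on rollback."""
    try:
        # `with conn` commits on success and rolls back if an exception escapes.
        with conn:
            for sql in statements:
                conn.execute(sql)
        return True
    except sqlite3.Error:
        return False

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging (id INTEGER PRIMARY KEY, amount REAL)")
conn.execute("CREATE TABLE target (id INTEGER PRIMARY KEY, amount REAL)")
conn.commit()

# First attempt: the second statement references a column that does not exist.
bad = [
    "INSERT INTO staging VALUES (1, 10.0)",
    "INSERT INTO target SELECT id, amt FROM staging",
]
# Adjusted strategy after the failure: use the correct column name.
good = [
    "INSERT INTO staging VALUES (1, 10.0)",
    "INSERT INTO target SELECT id, amount FROM staging",
]

lessons = []  # stand-in for the agent's memory system
for attempt in (bad, good):
    if run_workflow(conn, attempt):
        break
    lessons.append("attempt failed and was rolled back")
```

Because the failed attempt is rolled back as a whole, the retry can insert the same staging row again without hitting a primary-key conflict.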

UDF Sandbox

Data Agents do more than query data—they process it. Calling LLM APIs, running complex Python logic, performing data cleaning and analytics—these are high-frequency needs.

A built-in UDF Sandbox directly addresses these requirements.

This design offers three core advantages:

- Push code to data: Execute AI-generated Python code where the data resides, eliminating network overhead from large-scale data movement.

- Fault isolation: UDFs run in isolated sandboxes, so even if code crashes, the database's main process remains stable.

- Version control: UDF code is version-controlled, ensuring computation logic is traceable and reproducible, enabling A/B testing and iterative optimization.
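The fault-isolation point can be illustrated with a small Python sketch that runs generated code in a separate interpreter process, so a crash or hang cannot take down the caller. This is an assumption-laden toy: `run_udf` is a hypothetical helper, and process isolation alone only contains crashes. A production sandbox would also restrict filesystem, network, and resource access.

```python
import subprocess
import sys

def run_udf(code, timeout=5.0):
    """Run untrusted code in a separate Python process; return (ok, output)."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # A runaway UDF is killed instead of blocking the host process.
        return False, "timeout"
    if proc.returncode != 0:
        return False, proc.stderr.strip()
    return True, proc.stdout.strip()

# A well-behaved UDF returns its result through stdout.
ok, out = run_udf("print(sum(x * x for x in range(4)))")

# A UDF that kills its own process is reported as a failure; the host keeps running.
crashed_ok, _ = run_udf("import os; os._exit(1)")
```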

Extreme Stability

Human user queries typically follow predictable patterns that DBAs can optimize for. But Data Agent requests are entirely unpredictable, with query complexity varying dramatically, all while demanding maximum execution speed.

This "unpredictable, high-concurrency, speed-hungry" workload pattern places higher demands on database stability. Traditional data warehouses often respond to abnormal loads by tuning parameters or restarting services. But in enterprise-scale scenarios, a single restart might mean hours of downtime.

Zero-tuning stability is essential:

- Adaptive resource management: dynamically adjust memory and compute resources based on load, preventing OOM crashes

- Query circuit-breaking: intelligently throttle resource-intensive queries to protect overall availability

- Hot configuration updates: adjust critical parameters online without downtime

Stability is the foundation for Data Agents to run 24/7.
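One way to picture query circuit-breaking and hot configuration updates is a simple admission gate. In the sketch below, queries are admitted only while their estimated memory fits a shared budget. The `QueryGate` class, the megabyte figures, and the idea of a per-query memory estimate are illustrative assumptions rather than a description of a real scheduler.

```python
class QueryGate:
    """Admit queries only while their estimated memory fits a shared budget."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self.in_use_mb = 0

    def try_admit(self, estimated_mb):
        # Reject (a fuller design might queue) instead of risking an OOM crash.
        if self.in_use_mb + estimated_mb > self.budget_mb:
            return False
        self.in_use_mb += estimated_mb
        return True

    def release(self, estimated_mb):
        # Called when a query finishes, returning its share of the budget.
        self.in_use_mb = max(0, self.in_use_mb - estimated_mb)

    def resize(self, budget_mb):
        # Hot configuration update: change the limit without a restart.
        self.budget_mb = budget_mb

gate = QueryGate(budget_mb=1000)
first = gate.try_admit(600)   # fits within the budget
second = gate.try_admit(600)  # would exceed 1000 MB, so it is throttled
gate.resize(1500)             # limit raised online
third = gate.try_admit(600)   # now fits
```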

The Evolution of Databend

Databend, a cloud-native data warehouse built entirely on object storage, is evolving in this direction:

- Unified storage with semantic awareness: Native Parquet support, unified storage for structured, semi-structured, vector, and geospatial data. Built-in Audit Trail system provides comprehensive query_history and access_history records, helping agents understand table usage patterns and data meaning.

- Instant Schema Evolution: Rust storage engine implements complete metadata versioning, with instant Add/Drop Column operations.

- Zero-overhead branching: Zero-Copy Snapshot technology enables lightweight branching and tag management, with full audit trails.

- Robust transaction support: ACID multi-statement transactions ensuring atomicity and consistency for multi-step operations.

- UDF Sandbox (coming soon): Sandboxed Python UDFs with isolation and auto-scaling.

- Extreme stability: Multi-Cluster architecture with automatic Scale In/Out based on load, designed to work well out of the box with no parameter tuning required.

Get started today

Databend Cloud — the agent-ready data warehouse for analytics, search, AI, and Python Sandbox. Start in minutes and get $200 in free credits.
